Apache Kafka is an open-source distributed event-streaming platform used by thousands of companies worldwide to build event-driven applications. In this article, I will explain how to set up a Kafka cluster with a Kafka UI, where you can manage topics and consumers, using a Docker Compose script.

What is Kafka?

Kafka is an open-source distributed event-streaming platform used to build real-time data pipelines and streaming applications. It follows a publish-subscribe (pub-sub) model and provides high-throughput, low-latency, fault-tolerant event transmission from senders to receivers.

Key components of Kafka

Here are some key components of the Kafka tool.

- Producer: a client that publishes messages to a Kafka topic.

- Consumer: a client that reads messages from a Kafka topic.

- Broker: a Kafka server that stores messages and serves requests from producers and consumers.

- Topic: a named category to which producers publish messages and from which consumers read them.

- Partition: an ordered, append-only slice of a topic's messages. A topic is split into one or more partitions, which can be spread across brokers to distribute load and improve throughput.

- Offset: a sequential number that uniquely identifies each message within a partition.
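
To make partitions and offsets more concrete, here is a toy in-memory model in Python. It is only a sketch of the idea, not how Kafka is implemented: the `ToyTopic` class and its methods are names I made up for illustration, and the CRC32 hash simply stands in for the key hashing done by a real Kafka client.

```python
import zlib
from dataclasses import dataclass, field


@dataclass
class ToyTopic:
    """Hypothetical in-memory stand-in for a topic (not a Kafka API)."""

    name: str
    num_partitions: int
    partitions: list = field(init=False)

    def __post_init__(self):
        # Each partition is its own ordered, append-only list of messages.
        self.partitions = [[] for _ in range(self.num_partitions)]

    def produce(self, key: str, value: str):
        """Append a message and return (partition, offset)."""
        # Hash the key so the same key always lands in the same partition.
        # CRC32 is an assumption for this sketch, not Kafka's actual hash.
        partition = zlib.crc32(key.encode("utf-8")) % self.num_partitions
        log = self.partitions[partition]
        log.append(value)
        # The offset is the position of the message within its partition.
        return partition, len(log) - 1

    def consume(self, partition: int, offset: int):
        """Return all messages in a partition starting at the given offset."""
        return self.partitions[partition][offset:]
```

Producing two messages with the same key returns the same partition with offsets 0 and 1, and `consume(partition, 1)` then returns only the second message. Offsets are counted per partition, so two messages in different partitions can share the same offset.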

Set up Kafka and Kafka UI using Docker Compose

Now that we have some understanding of Kafka and how it works, let’s start setting it up using a docker-compose script.


    version: "3"

    services:
      zookeeper:
        image: wurstmeister/zookeeper:3.4.6
        ports:
          - "2181:2181"

      kafka:
        image: wurstmeister/kafka:latest
        ports:
          - "9092:9092"
        environment:
          KAFKA_ADVERTISED_LISTENERS: INSIDE://kafka:9092
          KAFKA_LISTENER_SECURITY_PROTOCOL_MAP: INSIDE:PLAINTEXT
          KAFKA_INTER_BROKER_LISTENER_NAME: INSIDE
          KAFKA_LISTENERS: INSIDE://0.0.0.0:9092
          KAFKA_ZOOKEEPER_CONNECT: zookeeper:2181
        depends_on:
          - zookeeper

      kafka-ui:
        image: provectuslabs/kafka-ui
        ports:
          - "8080:8080"
        environment:
          KAFKA_CLUSTERS_0_NAME: "local"
          KAFKA_CLUSTERS_0_BOOTSTRAP_SERVERS: "kafka:9092"

This docker-compose script sets up a Kafka cluster with Zookeeper and a web interface for the cluster where you can manage topics, messages etc.

It defines three Docker containers: 1) zookeeper, 2) kafka and 3) kafka-ui.

Zookeeper

    zookeeper:
      image: wurstmeister/zookeeper:3.4.6
      ports:
        - "2181:2181"

Kafka uses Zookeeper to store and manage cluster metadata, such as the list of brokers, topic configuration and other distributed state.

Kafka broker

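    kafka:
      image: wurstmeister/kafka:latest
      ports:
        - "9092:9092"
      environment:
        # Address that the broker hands out to clients
        KAFKA_ADVERTISED_LISTENERS: INSIDE://kafka:9092
        # Security protocol used by each named listener
        KAFKA_LISTENER_SECURITY_PROTOCOL_MAP: INSIDE:PLAINTEXT
        # Listener used for broker-to-broker traffic
        KAFKA_INTER_BROKER_LISTENER_NAME: INSIDE
        # Interface and port that the broker binds to
        KAFKA_LISTENERS: INSIDE://0.0.0.0:9092
        # Where the broker finds Zookeeper
        KAFKA_ZOOKEEPER_CONNECT: zookeeper:2181
      depends_on:
        - zookeeper

It sets up a Kafka broker to handle message producers and consumers. The script uses the latest version of the wurstmeister/kafka image and starts the broker after the zookeeper container. It also defines a few basic environment variables that configure the broker at startup. Because the broker advertises itself as kafka:9092, clients connect to it using that container name, which resolves inside the Docker Compose network.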
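
The listener variables are easier to read side by side. The following Python sketch is just a reading aid I wrote for this article, not how Kafka parses its configuration: `parse_listeners`, `parse_protocol_map`, `describe_listeners` and `BROKER_ENV` are hypothetical names, and the values are copied from the compose script.

```python
def parse_listeners(value):
    """Turn 'NAME://host:port,...' into {NAME: (host, port)}."""
    listeners = {}
    for entry in value.split(","):
        name, address = entry.strip().split("://", 1)
        host, port = address.rsplit(":", 1)
        listeners[name] = (host, int(port))
    return listeners


def parse_protocol_map(value):
    """Turn 'NAME:PROTOCOL,...' into {NAME: PROTOCOL}."""
    return dict(pair.strip().split(":", 1) for pair in value.split(","))


def describe_listeners(env):
    """Combine the four listener settings into one row per listener name."""
    bind = parse_listeners(env["KAFKA_LISTENERS"])
    advertised = parse_listeners(env["KAFKA_ADVERTISED_LISTENERS"])
    protocols = parse_protocol_map(env["KAFKA_LISTENER_SECURITY_PROTOCOL_MAP"])
    inter_broker = env["KAFKA_INTER_BROKER_LISTENER_NAME"]
    return [
        {
            "name": name,
            "binds_to": bind[name],              # where the broker listens
            "advertised_as": advertised[name],   # what clients are told to use
            "protocol": protocols[name],
            "inter_broker": name == inter_broker,
        }
        for name in bind
    ]


# Values copied from the kafka service in the compose script.
BROKER_ENV = {
    "KAFKA_LISTENERS": "INSIDE://0.0.0.0:9092",
    "KAFKA_ADVERTISED_LISTENERS": "INSIDE://kafka:9092",
    "KAFKA_LISTENER_SECURITY_PROTOCOL_MAP": "INSIDE:PLAINTEXT",
    "KAFKA_INTER_BROKER_LISTENER_NAME": "INSIDE",
}
```

Calling `describe_listeners(BROKER_ENV)` gives a single row for the INSIDE listener: it binds to all interfaces on port 9092, is advertised as kafka:9092, uses PLAINTEXT and also carries the inter-broker traffic.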

Kafka UI

kafka-ui:

image: provectuslabs/kafka-ui

ports:

- "8080:8080"

environment:

KAFKA_CLUSTERS_0_NAME: "local"

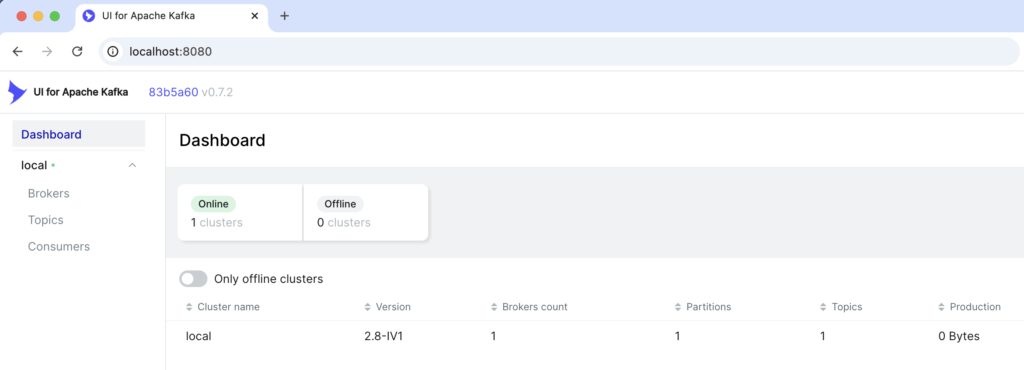

KAFKA_CLUSTERS_0_BOOTSTRAP_SERVERS: "kafka:9092"It provides a web interface to interact with Kafka broker. You can manage topics, partitions, messages etc. It connects with Kafka broker through container name and port kafka:9092.

How to run

Now that you have prepared a docker-compose script to set up the Kafka cluster and UI, let’s start the containers.

Run the following Docker command to start the containers in the background.

    docker compose up -d

Wait for the command to finish. You should see all three services reported as started or running in the terminal.
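
The containers can take a few seconds to become usable after the command returns. If you want to wait for the UI from a script, a minimal sketch in Python could look like this. `ui_is_up` and `wait_for_ui` are hypothetical helper names, and the default URL assumes the 8080 port mapping from the compose script.

```python
import http.client
import time
import urllib.request


def ui_is_up(url, timeout=2.0):
    """Return True if an HTTP GET to the URL gets a successful response."""
    try:
        with urllib.request.urlopen(url, timeout=timeout) as response:
            return 200 <= response.status < 400
    except (OSError, http.client.HTTPException):
        # Connection refused, timeouts and HTTP error statuses all end up here.
        return False


def wait_for_ui(url="http://localhost:8080", deadline_s=60.0, interval_s=2.0):
    """Poll the URL until it responds or the deadline passes."""
    stop_at = time.monotonic() + deadline_s
    while True:
        if ui_is_up(url):
            return True
        if time.monotonic() >= stop_at:
            return False
        time.sleep(interval_s)
```

Calling `wait_for_ui()` after starting the containers returns `True` once the Kafka UI answers on port 8080, or `False` if it does not respond within a minute. A response only shows that the web interface is serving pages, so it is still worth checking in the UI that the cluster appears as online.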

Open the URL http://localhost:8080 in your browser.

You can explore various pages like Brokers and Topics to create and manage topics, partitions etc. in Kafka.

Conclusion

The article covers what Kafka is, its basic components and how to set up a Kafka cluster with a UI using a Docker Compose script.

In the next article, I will explain how to interact with a Kafka broker using producer and consumer.

Write your queries in the comment section below!